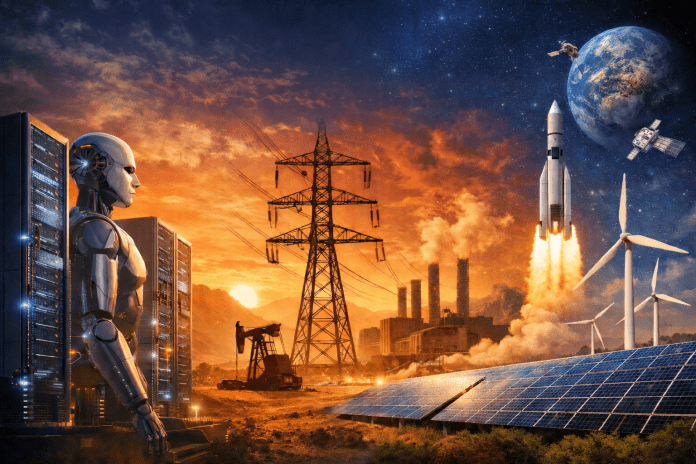

Artificial intelligence is growing at an extraordinary pace. Models are getting larger. Inference workloads now run around the clock. AI systems are embedded in core business operations. However, beneath this rapid growth lies a clear constraint: energy.

Leaders at companies such as NVIDIA and Google openly state that AI’s future depends not only on chips and software but also on reliable power infrastructure. In other words, the next decade of AI will depend as much on electricity as on innovation.

AI’s Rising Energy Demand:

Training advanced AI models requires massive computing clusters that run continuously for weeks. At the same time, enterprises now deploy AI systems that operate around the clock. As a result, energy demand no longer comes in short spikes; it stays consistently high.

According to the International Energy Agency, electricity use from data centers is expected to increase sharply this decade. AI workloads will drive a significant share of that growth. In fact, some hyperscale data centers already consume as much power as small cities.

Consequently, several regions in the United States, Europe, and Asia are beginning to face grid capacity pressure.

The Infrastructure Bottleneck:

Unlike earlier digital shifts, AI expansion requires physical infrastructure at scale. Companies must build more data centers, install advanced cooling systems, and secure stable grid connections. Therefore, infrastructure planning has become a strategic priority.

In the UK, National Grid has reported rising connection requests from data center operators. Similarly, U.S. states such as Virginia and Texas are accelerating energy planning processes to meet AI-driven demand.

This trend shows that the constraint is no longer theoretical. It is operational.

The Search for Stable Power Sources:

Renewable energy remains central to corporate sustainability goals. Companies such as Microsoft and Google continue to invest heavily in wind and solar projects. Nevertheless, renewable sources alone cannot guarantee constant uptime, because wind and solar output varies with the weather and the time of day.

Because AI clusters require uninterrupted power, organizations are now evaluating additional solutions. These include advanced nuclear technologies, small modular reactors, geothermal energy, and generation co-located with data centers.

As a result, energy strategy now sits at the center of AI strategy.

The Geopolitical Shift:

Access to affordable and stable electricity is quickly becoming a competitive advantage. Countries that expand grid capacity faster will attract more AI infrastructure investment. Conversely, regions with slow approvals or limited generation may fall behind.

For this reason, energy security increasingly overlaps with compute security. The nations that scale both will shape the next phase of global AI leadership.

Implications for Business Leaders:

For enterprises, the AI energy challenge forces concrete strategic decisions. Leaders must consider infrastructure location, long-term power contracts, regulatory exposure, and sustainability commitments.

Moreover, organizations should measure the energy intensity of their AI workloads alongside performance metrics, for example the energy consumed per training run or per thousand inference requests. Those that plan early will reduce risk and protect margins.

Ultimately, the defining question is not only who builds the most advanced AI models. It is who can power them reliably, sustainably, and at scale.
